Neurotech monthly. February-June 2024

Stimulation and cognitive abilities, encoding and false memories, flexible/injectable electrodes of various shapes and forms, printing electronics on the cranium, intrinsic and input-driven neural dynamics, mental imagery and silent speech, neurotech and international security, 13 funding announcements

Hello!

The February to June 2024 Astrocyte* newsletter is here. Sorry for not being consistent with publishing; it results from a busy work schedule at e184 and family commitments.

Below are also a few things I'm working on. Subscribe to get these notes and a monthly newsletter into your mailbox.

Soon to be published/what has been published:

- A note on Synchron, an endovascular brain-computer interface - November 2023 - moved to September 2024 (yeah, moved again 🤦♂️, but almost done!);

- An updated version of the BCI startup landscape - December 2023 - moved to October 2024.

- A note from EMBC 2024 - December 2024.

Please share and subscribe if you liked it.

Table of Contents

I. Reading List

1) Techstack/Hardware/Wetware

- Non-Invasive - two studies on how non-invasive (fUS and VNS) stimulation improves cognitive performance; closed-loop TMS; fUS to increase local attention and filter out external information;

- Invasive/Minimally Invasive - false memory in hippocampus & neural prosthetic to facilitate human memory encoding and recall; inner speech decoding; self-stiffening intracortical microprobe; flexible electrodes implantable via <2mm hole; flexible electrodes spanning multiple brain areas; <15 µm electrodes in visual cortex; directly printing electronics on the cranial surface; optical probes; spinal cord stimulation to convey functionally unrelated sensory input to the brain;

2) Data Science - a model for intrinsic and input-driven neural population dynamics; a foundation model for SEEG/EEG; mental imagery decoding from fMRI; LLMs applied to EMG data for silent speech reconstruction.

3) Misc Neurotech Reading - feedback loops between BCI commercialisation and research acceleration and between AI and neuro research fields; a petavoxel fragment of human cortex and brain organoids; a blueprint of how neurotech may move to the clinical frontline, based on TMS' adoption trajectory; payers, social consensus, and non-tech BCI challenges; neuroethics & neurorights.

II. Startup/Corporate News

1) Business Reading - neurotech and international security, what affects neurotech adoption at the macro/country level; a story of a bionic eye startup; vertical integration in the BCI world.

2) Funding - EEG for hearing tests and consumer/productivity applications; electrical stimulation against depression, gait and balance deficit, headaches, and menstrual pain, and for better sleep; EMG for consumer devices and prostheses; light and sound for depression and sleep.

Twitter handles of the authors, provided after some papers, are in no particular order and are based on my ability to identify authors by handles; let me know if I missed anyone.

I. Reading List 📚

Techstack/Hardware/Wetware 🧠💿🧫

Non-invasive 🎧

Modulating brain networks in space and time: multi-locus transcranial magnetic stimulation. An overview of multi-locus transcranial magnetic stimulation (mTMS), where '... a set of multiple coils, or a coil array, is used to enable the stimulation of different cortical target sites without moving the coils. The targeting is based on firing multiple coils simultaneously, with different pulse combinations stimulating different cortical locations.' The tech resonates with how I see a successful BCI - frictionless, closed-loop, real-time. Probably the missing component is increased mobility. Here is more about the closed-loop side of things:

This technology makes it possible to define spatiotemporal pulse sequences able to excite multiple nodes of functional networks with any desired order and precise timing of the pulses, replacing the single-locus, predetermined stimulation paradigms of conventional TMS. ... a major paradigm shift in the possibility of controlling these spatiotemporal sequences with a closed-loop approach—a computer algorithm adjusting the treatment or study based on real-time feedback from electroencephalography (EEG), electromyography (EMG), or other recordings.

X: @MutanenTuomas, @vittomeg, @MarzettiLaura, @TimoRoine, @MattiStenroos, @ulfziemann1

Nanobubble-actuated ultrasound neuromodulation for selectively shaping behavior in mice. Ultrasound challenges: low frequencies are required to penetrate the skull safely, so diffraction limits spatial precision to somewhere between a millimeter and a centimeter; skulls are acoustically heterogeneous and vary in their physical features; and the brain's inherent sensitivity to ultrasound is unevenly distributed across areas.

Workarounds to localise ultrasound via secondary stimulus: sonogenetics, laser-induced ultrasound, and nanoparticle-based ultrasound stimulation.

Nanoparticle-mediated techniques generally rely on nanomaterials to convert a remotely-transmitted primary stimulus (light, US, magnetism, etc) to a localized secondary stimulus at the nanomaterial-neuron interface. ... In this paper, we present nanobubbles with optimized surface properties (PEGylated gas vesicles, PGVs), as ultrasonic actuators for localized neural stimulation in an acoustic field.

Long-lasting forms of plasticity through patterned ultrasound-induced brainwave entrainment. Low-intensity, low-frequency ultrasound stimulation (LILFUS) was applied to mice, and researchers established:

... specific ultrasound parameters designed to mimic the brainwave patterns of theta and gamma oscillations observed during learning and memory processes. By entraining brain activity and replicating the oscillatory patterns associated with cognitive processes, we were able to induce predictable and long-lasting changes in brain function.

... When the researchers delivered ultrasound stimulation to the cerebral motor cortex in mice, they observed significant improvements in motor skill learning and the ability to retrieve food. ...researchers were even able to change the forelimb preference of the mice.

This is very interesting, and further research may bring us closer to one of the 'BCI killer features', where the tech allows users to learn faster.

Ultrasound modulation improves performance of tasks using an EEG-based BCI. fUS modulation of the geometric center of V5 reduced error in a BCI speller task under Distraction. Euclidean error, calculated by assessing the spatial distance between the target letter and the typed letter, with fUS stimulation (N = 25 subjects/356 trials; mean error = 13.3 ± 18.4%) was significantly lower than those of the non-modulated (N = 25 subjects/351 trials; mean error = 15.5 ± 18.7%; padjusted < 0.01). See - Transcranial focused ultrasound to V5 enhances human visual motion brain-computer interface by modulating feature-based attention.

... our results suggest that the mechanism of action of tFUS in our BCI paradigm is two-fold: (1) it increases local attention by increasing the theta power and (2) it filters out external information by boosting alpha power.

This is an interesting approach to improving a non-invasive BCI: not by improving its capabilities, but by making the system it operates on, the brain, better prepared to engage with the BCI. It reminds me of the approach of changing the environment to make operating on the road easier for self-driving cars. In addition to improving the capabilities of the car itself (better sensors, better ML, etc.), the idea is to introduce dedicated lanes, for example, so that the environment matches the current capabilities of the autonomous car.
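The Euclidean error metric from the speller study above can be illustrated with a minimal sketch. The 6x6 grid layout and the normalisation by the grid diagonal (to express the error as a percentage) are assumptions made for illustration; the study's actual layout and normalisation are not described here.

```python
import math

# Hypothetical 6x6 speller layout (an assumption, not the study's grid).
GRID = [
    "ABCDEF",
    "GHIJKL",
    "MNOPQR",
    "STUVWX",
    "YZ1234",
    "56789_",
]


def position(symbol):
    """Return the (row, col) of a symbol on the speller grid."""
    for row, line in enumerate(GRID):
        col = line.find(symbol)
        if col != -1:
            return row, col
    raise ValueError(f"{symbol!r} is not on the speller grid")


def euclidean_error(target, typed):
    """Distance between target and typed symbols, as a % of the grid diagonal."""
    (r1, c1), (r2, c2) = position(target), position(typed)
    distance = math.hypot(r1 - r2, c1 - c2)
    # Assumed normalisation: the largest possible distance on this grid.
    max_distance = math.hypot(len(GRID) - 1, len(GRID[0]) - 1)
    return 100.0 * distance / max_distance


def mean_error(pairs):
    """Average Euclidean error over (target, typed) trial pairs."""
    return sum(euclidean_error(t, s) for t, s in pairs) / len(pairs)
```

A correct selection scores 0%, a neighbouring key scores a small error, and the opposite corner of the grid scores 100%, so the metric rewards near misses more than a plain hit/miss accuracy would.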

Another interesting work on cognitive abilities - Transcutaneous cervical vagus nerve stimulation improves sensory performance in humans: a randomized controlled crossover pilot study. It showed that transcutaneous cervical vagus nerve stimulation (tcVNS), relative to sham stimulation, improved auditory performance by 37% (p = 0.00052) and visual performance by 23% (p = 0.038).

Researchers '... conducted three sham-controlled experiments, each with 12 neurotypical adults, that measured the effects of transcutaneous VNS on metrics of auditory and visual performance [namely auditory gap discrimination and visual letter discrimination tasks], and heart rate variability (HRV).'

Notably...

... in the present study in humans, continuous VNS generated rapid and steady sensory benefits during stimulation delivery that generalized across different experiments, sensory tasks, and stimulus properties. In the current study, we randomized stimuli across trials (i.e., different letters and tone frequencies in the visual and auditory tasks, respectively) to minimize learning, and still observed tVNS-evoked improvements.

So, in this study, we are talking about 'on-demand' improvement, not longer-term learning, as, for instance, in the ultrasound study mentioned above.

Invasive/Minimally Invasive 🧑⚕️ 🏥

Developing a hippocampal neural prosthetic to facilitate human memory encoding and recall of stimulus features and categories. Electrical stimulation of the hippocampus is delivered invasively in patient-specific and image category-specific patterns to fourteen patients.

The results of this study indicate that fixed pattern hippocampal stimulation is a viable approach for altering retention of specific information content in human subjects. MDM [memory decoding model] stimulation altered memory in nearly a quarter of the instances, with a nearly 2 to 1 ratio in increase to decrease across all patients when Match Stim was used, and a 9 to 2 ratio in Impaired memory patients that received Bilateral stimulation. ... but refining of training for the MDM model and accuracy of derived codes is needed prior to this approach being able to be ready for use in a neural prosthetic.

X: @HMungerClary, @dongsong, @ThingyInBrainy

While reading around the paper from above, I came across a 2013 paper, Creating a false memory in the hippocampus. A great overview of this paper by @analog_ashley is here. The key findings:

... different contexts (“A” or “C”) activate different populations of cells in the dentate gyrus [of the mice]. ... If you artificially stimulate context-A-related cells in an entirely different context — namely, B — while fear conditioning them (shocking their feet), the mice start to freeze in context A; in other words, they are now afraid of context A despite nothing bad having happened there.

Speech is probably more widely researched than memory. A new avenue of speech decoding research is the supramarginal gyrus (SMG). Representation of internal speech by single neurons in human supramarginal gyrus demonstrates:

... a decoder for internal and vocalized speech, using single-neuron activity from the SMG. Two chronically implanted, speech-abled participants with tetraplegia were able to use an online, closed-loop internal speech BMI to achieve on average 79% and 23% classification accuracy with 16–32 training trials for an eight-word vocabulary.

X: @sarah_wandelt, @davidbjanes

Really excited to finally get this out! @sarah_wandelt https://t.co/xMqCeAqRi8

— David Bjånes (@davidbjanes) May 24, 2024

A self-stiffening compliant intracortical microprobe - the paper addresses the challenge of developing a microprobe stiff enough to penetrate the brain surface but soft enough not to damage it and activate the brain’s immune system. The authors iterate on their previous design and propose a self-stiffening compliant intracortical microprobe that allows for:

... instant switching between stiff and soft modes [that] can be accomplished as many times as necessary to correct positioning errors and minimise tissue damage during accurate positioning.

Flexible polymer electrodes for stable prosthetic visual perception in mice. Interesting work on miniaturisation: '... highly flexible, thin polyimide shanks with several small (<15 µm) electrodes during electrical microstimulation of the... primary visual cortex (area V1) of mice.'

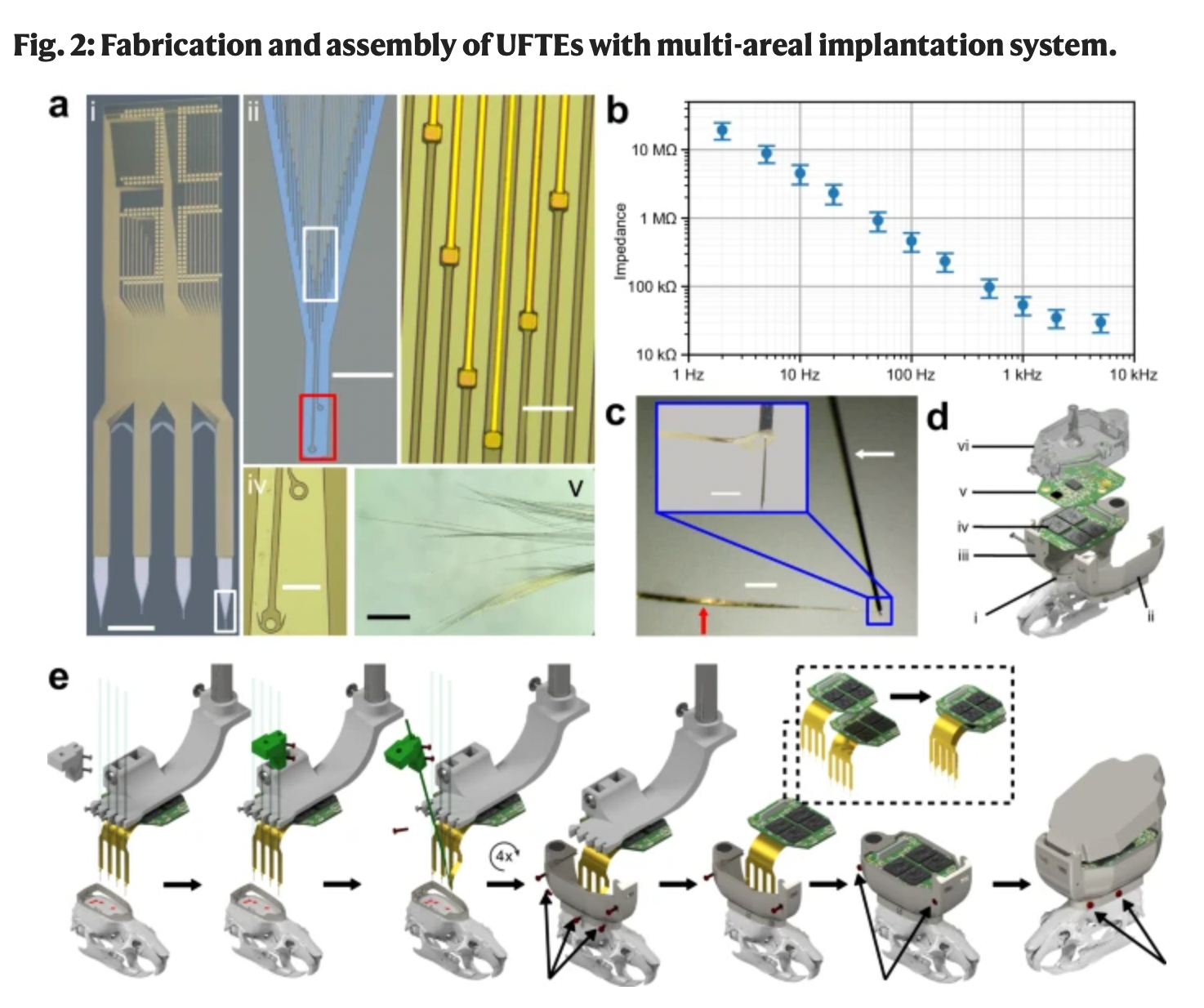

Months-long tracking of neuronal ensembles spanning multiple brain areas with ultra-flexible tentacle electrodes - the paper addresses the challenge of long-term recording from multiple brain areas at single-neuron resolution. This is required both for studying complex behaviours and, in my opinion, for wider adoption of BCIs. For example, due to limited coverage, invasive systems have not been actively used for mental imagery decoding, as this activity is more distributed than speech.

The paper presents the following system:

... mechanically uncoupled, ultra-flexible polyimide [sub-cellular-sized electrode] fibers that self-assemble into bundles to efficiently pack many recording contacts in a small footprint.

... Fibers are held together with a biodegradable glue during insertion into the brain. ... The silk glue holds only the electrode fibers together for each bundle but is not used to connect the bundle to the insertion shuttle. Instead, we mechanically couple the bundle of many fibers to the insertion shuttle... Mechanical coupling to a thin yet stiff insertion shuttle enables reliable insertion of the electrode bundle with many independent fibers into the brain and the immediate removal of the insertion shuttle without waiting for the glue holding the fibers to dissolve.

... The small size and ultra-flexibility of the UFTE fibers remaining in the brain after the removal of the insertion shuttle allow them to interdigitate with the neuronal structures, resulting in months-long stable recordings of single units. ...

Chronic electrophysiological recordings were performed in two male mice.

Hard work of Baran, Peter, Wolfger https://t.co/LYwNlfMqV4. It took a while to establish everything in house, but more to come soon. Special thanks to our reviewers. https://t.co/0cJrgr0Gcf

— Mehmet Fatih Yanik (@mfyanik) June 8, 2024

Months-long tracking of neuronal ensembles spanning multiple brain areas with… pic.twitter.com/d4feMjsz9X

Fully flexible implantable neural probes for electrophysiology recording and controlled neurochemical modulation. The paper describes '... a novel, flexible, implantable neural probe capable of controlled, localized chemical stimulation and electrophysiology recording.' One of the aims was to overcome challenges with fluidic delivery methods (drug leakage, tip clogging, reflux, the need for additional hardware such as pumps and valves, and a larger system size).

In contrast to fluidic drug delivery devices, electrically controlled drug delivery releases only the drug of interest without the need for an artificial solvent. This dry delivery technique allows a larger amount of drug release with near-constant local pressure. ... This neural probe is designed to implant into different brain regions of Sprague Dawley rats and record the neural activities corresponding to chemical stimulation. The length of the probe shank is designed to be 10 mm so that it can cover cortical laminae and, at the same time, reach deep brain nuclei.

A very flexible mesh, used as a base for electrodes, makes it possible to create '... an implantable multi-modal sensor array that can be injected through a small hole in the skull and inherently spread out for conformal contact with the cortical surface...' Injectable 2D material-based sensor array for minimally invasive neural implants describes a system that can be implanted via a skull hole of 1–2 mm in diameter into the subdural space between the dura mater and the arachnoid mater, where it unfolds.

A very different approach is offered in In-vivo integration of soft neural probes through high-resolution printing of liquid electronics on the cranium, where electronics are '... directly printed on the cranial surface. The high-resolution printing of liquid metals forms soft neural probes with a cellular-scale diameter and adaptable lengths'. In this case, a proper craniotomy is required. But among the advantages of this approach are:

1) Ability to print probes with various lengths and a fine diameter of 5 μm, can be adjusted to various depths and locations of the brain; 2) Electrical interconnections and subsidiary electronics are directly printed, the integrity of electronics to biological systems increases; 3) Probes and electronics are integrated as neural interface systems with adaptable geometries allowing for a diversified structural design of the system.

Conversion of a medical implant into a versatile computer-brain interface. In this paper, the researchers '... set out to develop a general-purpose CBI [computer-brain interface] paradigm that allows widely available and safe medical implants as a central information entry point to support diverse clinical applications.'

Among other things, I liked the idea of a general-purpose interface that:

[does] not aim to emulate natural neural signals and re-create naturalistic input, but rather make use of the brain’s ability to learn to decode quasi-arbitrary patterns coming from a functionally unrelated sensory input channel.

This paper considers spinal cord stimulation (SCS) a promising hardware platform for CBI applications.

18 participants received a spinal computer-brain interface and performed tasks (rhythm discrimination, Morse-decoding, two different balance/body-posture tasks) based on information communicated exclusively through electrical messages sent into the individual’s spinal cord.

The paper showed '... that SCS can be used to effectively convey a diverse range of information, from rhythmic cues to artificial sense of balance.' 'In each task, every participant demonstrated successful performance, surpassing chance levels.'

A key to assembling materials on the surface of live neurons focuses on building polymers from their parts within biological tissue. The process looks like the following (a description by @grace_huckins):

... to genetically engineer the neurons so that they produced an enzyme that could catalyze polymerization reactions. Then, when the neurons were bathed in polymer components, the enzymes embedded in their membranes transformed the components into a cohesive material.

The challenge was how to put the enzyme in the right place. CD2 is a protein from the surface of the immune system’s T cells. CD2 efficiently shuttles an enzyme to its target location on the cell’s surface and can be leveraged to build minimally invasive brain interfaces.

X for the original paper: @ZhangAnqi

Happy to share our work on genetically targeted chemical assembly specifically localized to neuron membranes in @ScienceAdvances today. Thank my advisors @KarlDeisseroth and @zhenanbao for their tremendous support! https://t.co/CcXwQSJ4AR

— Anqi Zhang (@ZhangAnqi) August 9, 2023

An asynchronous wireless network for capturing event-driven data from large populations of autonomous sensors. The neural dust concept is moving closer to reality: researchers developed '... a wireless communications system that mimics the brain using an array of tiny silicon sensors [300 by 300 micrometers each], each the size of a grain of sand. The researchers hope that the technology could one day be used in implantable brain-machine interfaces to read brain activity.' (an article by @gwendolyn_rak).

Another emerging modality is optical. An optoelectronic implantable neurostimulation platform allowing full MRI safety and optical sensing and communication describes a system where:

The wires usually used to convey electrical current from the neurostimulator to the electrodes are replaced by optical fibers. Photovoltaic cells at the tip of the fibers convert monochromatic optical energy to electrical impulses. Furthermore, a biocompatible, implantable and ultra-flexible optical lead was developed with a custom optical fiber.

Data Science 💻🧮

MindEye2: shared-subject models enable fMRI-to-image with 1 hour of data. An attempt to build a model that does not require dozens of hours of fMRI training data for each individual subject.

The present work showcases high-quality reconstructions using only 1 hour of fMRI training data. We pretrain our model across 7 subjects and then fine-tune on minimal data from a new subject. Our novel functional alignment procedure linearly maps all brain data to a shared-subject latent space, followed by a shared non-linear mapping to CLIP image space. We then map from CLIP space to pixel space by fine-tuning Stable Diffusion XL to accept CLIP latents as inputs instead of text.

I liked the granularity of this paper's approach - Modeling and dissociation of intrinsic and input-driven neural population dynamics underlying behavior (Here is a summary). Researchers separate intrinsic neural dynamics that underlie behaviour from other brain regions' neural inputs and sensory/measured inputs.

We develop an analytical learning method for linear dynamical models that simultaneously accounts for neural activity, behavior, and measured inputs. The method provides the capability to prioritize the learning of intrinsic behaviorally relevant neural dynamics and dissociate them from both other intrinsic dynamics and measured input dynamics.

Having this granularity is required for going from brain-computer interfaces to brain-computer operating systems, where algorithms do not only relay something from the brain (decode letters, words, images, etc.) but regulate brain patterns by optimizing external inputs from stim devices.
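To make the distinction between intrinsic and input-driven dynamics concrete, here is a minimal sketch of a linear state-space model with a measured input. It only simulates the model class; it is not the paper's learning method. All matrices and names (A, B, Cy, Cz, simulate) are hypothetical and chosen for illustration: the first latent state evolves on its own and drives behaviour, while the second is driven purely by the input and shows up only in the neural recordings.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def simulate(A, B, Cy, Cz, inputs, x0, noise_std=0.0, seed=0):
    """Simulate x[t+1] = A x[t] + B u[t] + w, y[t] = Cy x[t] + v, z[t] = Cz x[t]."""
    rng = random.Random(seed)
    x = list(x0)
    neural, behavior = [], []
    for u in inputs:
        # Observations at time t are read out from the current latent state.
        neural.append([y + rng.gauss(0.0, noise_std) for y in matvec(Cy, x)])
        behavior.append(matvec(Cz, x))
        # State update: intrinsic dynamics (A x) plus the input-driven term (B u).
        intrinsic = matvec(A, x)
        driven = matvec(B, u)
        x = [i + d + rng.gauss(0.0, noise_std) for i, d in zip(intrinsic, driven)]
    return neural, behavior


# Hypothetical parameters: two latent states, one input channel, three neurons.
A = [[0.9, 0.0], [0.0, 0.5]]               # each state decays on its own
B = [[0.0], [1.0]]                         # the input reaches only the second state
Cy = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # neurons mix both states
Cz = [[1.0, 0.0]]                          # behaviour reads only the first state

impulse = [[1.0]] + [[0.0]] * 4
neural, behavior = simulate(A, B, Cy, Cz, impulse, x0=[1.0, 0.0])
```

In this toy setup the input changes the neural activity but leaves the behaviour trace untouched, which is the kind of separation a method would need to recover from data before stimulation inputs can be optimised.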

X: @Vahidi_Parsa, @MaryamShanechi, @omidsani

🎉New in @PNASNews, we develop a method to disentangle intrinsic & input-driven neural dynamics of behavior.

— Maryam Shanechi (@MaryamShanechi) February 14, 2024

Doing so w/ sensory inputs reveals similar intrinsic behavior-related neural dynamics across subjects/tasks.

👏@Vahidi_Parsa @omidsani https://t.co/gk6bZY3Irk

🧵& code⬇️ pic.twitter.com/y0quKKSedK

Brant-2: foundation model for brain signals - an attempt to build a foundation model for brain signals. Among other things, it differs from previous attempts (covered in the September 2023 and January 2024 issues) in two interesting ways: 1) it works on two modalities, SEEG and EEG, both of which rely on the same principle of recording electrical activity; 2) it covers a variety of downstream tasks - seizure detection, seizure prediction, sleep stage classification, emotion recognition, and motor imagery classification.

The paper outlines why it is so difficult to build foundation models for brain data:

... different data exhibit differences in terms of sampling rates as well as the positions and quantities of electrodes... brain signals collected from different scenarios contain distinct physiological characteristics, leading to varying modeling scales. ... there is substantial diversity among different tasks in the field of brain signals

On the flip side, these challenges are an opportunity for neurotech startups.

Mental image reconstruction from human brain activity: neural decoding of mental imagery via deep neural network-based Bayesian estimation presents a machine learning method for visualizing subjective images (i.e., those not currently seen by a subject) in the human mind based on fMRI brain activity. This was achieved by enhancing one of the previous visual image reconstruction methods by integrating Bayesian estimation and semantic assistance.

X: @NishimotoShinji, @majimajimajima

Our paper on mental image reconstruction from human brain activity, led by Naoko Koide-Majima and @majimajimajima, was published in Neural Networks.

— Shinji Nishimoto (@NishimotoShinji) November 30, 2023

We combined Bayesian estimation and generative AI to visualize imagined scenes from human brain activity. https://t.co/vxBXGYzdBc pic.twitter.com/Oel3hYUegk

A cross-modal approach to silent speech with LLM-enhanced recognition. A new LLM-enhanced architecture that allows the use of audio-only datasets like LibriSpeech for training and uses cross-modal alignment through novel loss functions: cross-contrast (crossCon), supervised temporal contrast (supTcon), and Integrated Scoring Adjustment, to improve silent speech recognition.

It was applied to EMG sensor measurements captured on the face and neck during both vocalized and silently articulated speech.

X: @tbenst, @xoxo_meme_queen, @WillettNeuro, @ShaulDr

Our silent speech preprint is live! Using a cross-modal training technique enhanced by LLMs, we set a new state-of-the-art for silent speech (12.2% word error rate, open vocabulary) and brain-to-text (8.9% WER; Rank 1 on Brain-to-Text Benchmark '24) https://t.co/Pwfn1mV1hg pic.twitter.com/8HcbMMZZGt

— Tyler Benster (@tbenst) March 13, 2024
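The word error rate (WER) quoted in the tweet above is a standard metric: the minimum number of word substitutions, deletions, and insertions needed to turn the recognised sentence into the reference, divided by the number of reference words. Below is a minimal sketch of that definition; the function name and the whitespace tokenisation are assumptions, and benchmark implementations usually also normalise case and punctuation.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] = edit distance between the reference words seen so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)
```

Because insertions count as errors, WER can exceed 100% when the hypothesis is much longer than the reference.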

Misc Neurotech Reading 👨💻

Mind-reading devices are revealing the brain’s secrets. This article covers the kind of feedback loop I expect between tech/startups and research. The more we invest in and commercialise BCIs, the more science will be accelerated.

Although the main impetus behind the work [developing BCIs] is to help restore functions to people with paralysis, the technology also gives researchers a unique way to explore how the human brain is organized, and with greater resolution than most other methods. ... Results are overturning assumptions about brain anatomy, for example, revealing that regions often have much fuzzier boundaries [e.g. Broca’s area and speech production, the premotor cortex and all four limbs] and job descriptions [retaining the programmes for speech or movement even when paralysed] than was thought.

100 years ago, the first EEG recordings in humans were made. Since then, recording neural activity has become more sophisticated

— Miryam Naddaf (@MiryamNaddaf) February 21, 2024

In my latest for @Nature, I asked researchers what brain-computer interfaces can teach us about the brain. https://t.co/Rb0jo66OHi

Another example of BCIs contributing to the understanding of the brain came from researchers who built '... a novel deep learning-based neural speech decoding framework that includes an ECoG decoder that translates electrocorticographic signals' [paper]. They found out that [article]:

[even if] some of the participants only had electrodes implanted on their right hemisphere, providing the researchers no information about the left-hemisphere’s activities. ... they [researchers] were still able to use the information from the right hemisphere to produce accurate speech decoding. ... this reveal how speech is processed and produced by the brain across the two hemispheres, it also opens new possibilities for therapeutic interventions, particularly in addressing speech disorders like aphasia, following damage to the left hemisphere.

New paper out today in @NatMachIntell, where we show robust neural to speech decoding across 48 patients. https://t.co/rNPAMr4l68 pic.twitter.com/FG7QKCBVzp

— Adeen Flinker 🇮🇱🇺🇦🎗️ (@adeenflinker) April 9, 2024

Current mobile neurotech/BCIs are not enough to understand the brain better; much higher resolution is required. Various types of microscopy are used on human brain tissue, but getting this tissue is challenging. A petavoxel fragment of human cerebral cortex reconstructed at nanoscale resolution posits that '... the human brain tissue that is a by-product of neurosurgical procedures could be leveraged to study normal—and ultimately disordered—human neural circuits.'

The authors studied a sample of human temporal cortex, 1 mm³ in volume, extending through all cortical layers and covering thousands of neurons. They performed electron microscopy, produced 1.4 petabytes of data, and classified and quantified cell types, vessels and synapses.

We found a previously unrecognized class of directionally oriented neurons in deep layers and very powerful and rare multisynaptic connections between neurons throughout the sample.

X: @alex_shapsoncoe, @michalj, @stardazed0

Our human connectome project is now published; https://t.co/JNaFmbCGbm. Thanks to all contibutors, esp @michalj, D.Berger, @stardazed0 and J.Lichtman.

— Alex Shapson-Coe (@alex_shapsoncoe) May 14, 2024

As Cajal said; “The brain is a world consisting of a number of unexplored continents and great stretches of unknown territory".

Brain organoids (BOs) are an emerging alternative to human tissue, even though for now they do not approximate brain tissue architectonics. Brain organoids engineered to give rise to glia and neural networks after 90 days in culture exhibit human-specific proteoforms compares '... the profiles of select proteins in human BOs, to profiles in human cortical (Ctx) and cerebellar (Cb) autopsy brain tissues and mouse Ctx tissues. ... demonstrate[s] that banding pattern and protein proportions of several proteins in human BOs are more similar to human Ctx or Cb tissues than mouse Ctx tissues, indicating that the proteins in human BOs are processed in a manner similar to the proteins in the human brain parenchyma.'

X: @TylerJWenzel.

Another type of feedback loop emerges when BCI research connects to AI research. The new NeuroAI covers this topic. Currently, '... as AI research has evolved at a fast pace, progress over recent years has stirred a divergence from this original neuroscience inspiration. [researchers] emphasizes further scaling up of complex architectures such as transformers, rather than integrating insights from neuroscience.'

I can do nothing but strongly agree with the conclusion that '... [t]he upcoming generation of scientists will need to possess fluency in both domains [neuro and AI]...'. And while this article covers research interconnectedness between neuro and AI, I'm also excited about their contributions to each other at the tooling level. For example, using brain data to train models or improve labelling.

Brain stimulation poised to move from last resort to frontline treatment. The evolution and adoption of transcranial magnetic stimulation (TMS) provide a possible blueprint for other modalities transitioning from last-resort treatments to the frontline. Among the inflexion points for TMS adoption were: 1) a change in the paradigm from 'brain as soup' to recognising circuits and '... that disease arose from dysfunction within those circuits.'; 2) hardware advancements that allow flexibility and customisation - 'Continued hardware improvements and new ways of applying magnetic fields have proved crucial: The shape of the pulse applied to the coil, the amplitude, frequency, duration, and other parameters often make the difference in whether patients respond'; 3) precision targeting; 4) faster protocols.

TMS is approaching the frontline treatment role but is not yet there, as understanding how the techniques work at a cellular level remains challenging. For instance, '[w]hat other ways might the neural circuitry be affected? How might stimulation-induced changes influence, say, the blood-brain barrier or neurotransmitters?'. Also, for broad adoption, '... clinicians will have to determine, among other things, who respond best to which treatments.'

Based on this article, I'd suggest neurotech startup founders consider several aspects when evaluating how close to the clinical frontline they might be: 1) is your modality/approach backed by scientific advancements/insights/paradigm shift? 2) does this approach allow flexibility, customisation, and precision? 3) does this approach offer less friction compared to the status quo? 4) what are the 'unknowns', and how can they be addressed? 5) what tools do clinicians use to make decisions about their product?

X: @philipyam

In reporting this story for @PNASNews, I appreciate even more that the brain is an electrical device. But it's still a terrible power source for Matrix-based enslavement. https://t.co/tVelCVZe2B

— Philip Yam (@philipyam) February 13, 2024

What Neuralink is missing covers technological and social challenges associated with BCIs, and concludes that '... right now, we don’t have a social consensus on how to apportion resources such as health care, and many disabled people still lack the basic supports necessary to access society. Those are problems that technology alone will not—and cannot—solve.'

I'd agree that tech can't solve the challenge of resource apportioning. However, it could help to create more resources for a society to allocate. For BCIs specifically, the faster consumer BCIs hit the market, the faster the price of devices will go down and the more patients will be able to afford their medical versions. Moreover, medical devices could be directly sponsored by consumer ones. There's definitely room for improvement in Facebook's approach to providing free internet connectivity in selected countries, but the precedent is there.

The brain is the most complicated object in the universe. This is the story of scientists’ quest to decode it – and read people’s minds is a great article on neurotech/BCI history and perspective. I particularly liked the section on ethics and the idea of translation between modalities, where something learned via large/expensive devices could be reused with smaller/mobile devices. Here is an example for fMRI and fNIRS:

... fMRI, although non-invasive, is a non-wearable neuro-imaging technique, and it is clear these methods are not set to leave a strictly organised laboratory setting any time soon.

However, the HuthLab researchers suggest that in time, fMRI could be replaced by functional near-infrared spectroscopy (fNIRS) which, by “measuring where there’s more or less blood flow in the brain at different points in time”, could give similar results to fMRI using a wearable device.

Two articles on neuroethics and neurorights. An editorial in Nature covers how work with neuroscience study participants should change given tech progress (single-cell technologies, AI, etc.), while another one focuses on 'Effects of the first successful lawsuit against a consumer neurotechnology company for violating brain data privacy'.

Startup/Corporate News 📰💰

Business Reading

Neurotechnology and international security. Predicting commercial and military adoption of brain-computer interfaces (BCIs) in the United States and China. This research presents a framework that attempts to predict the dissemination of neurotechnologies to both the commercial and military sectors. The research covers multiple qualitative and quantitative factors that drive BCI adoption, among them:

- innovation system ('the network of institutions in the public and private sectors whose activities and interactions initiate, import, modify, and diffuse new technologies'),

- amount of brain project funding (BRAIN Initiative in the US),

- current BCI market share (in sales and # of patents),

- government structure (democratic-republic, autocratic rule, or another form) and its effect on tech dissemination,

- brain project and military goals (an alignment between research and military, research and applications),

- sociocultural norms (Hofstede’s cultural dimensions),

- research monkey resources.

The US performs better on items 1 to 3 and China wins on 4 to 7. The researchers conclude:

... China is more likely to be the first adopter of BCI technologies in both the commercial and military sectors because of its government structure, sociocultural norms, and greater alignment of brain project goals with military goals.

In a separate commentary for an article by @emilylmullin, Margaret Kosal, a co-author of the paper, mentions that...

“The US has not explicitly linked our civilian science with our military research,” she says. “China’s strategy fundamentally links the military and the commercial, and that is why there is concern.”

This resonates with my experience of covering hundreds of neurotech startups and spotting just a handful that focus on military applications, for example using fNIRS for 'telepathy' or fUS for pilot performance improvement. I think more startups should pay attention to military applications, as this not only directly helps national security but will likely spill over to civilian applications, as happened with other technologies, like mobile phones and satellite communications, to name a few.

X: @mekosal, @singer_sci, @emilylmullin

Upcoming publication by Dr Joy Putney @singer_sci from @QBioS_GT & I on understanding how truly #EmergingTech impacts #InternationalSecurity #Neurotechnology #CognitiveNeurosciences #DisruptiveTechnology #BridgingScienceTechnologyAndSecurity #WeCanDoThat! https://t.co/HURn9WKrEb

— Margaret E. Kosal (@mekosal) February 4, 2022

Bionic eye gets a new lease on life: Ex-Neuralink exec Max Hodak’s new company rescues Pixium technology. In August 2023's newsletter I mentioned the adventures of Second Sight (an article by @meharris), the developer of the Argus II retinal implant. Now, another implant developer has required rescue. Here's a great overview of both Second Sight and Pixium Vision by @meharris. Below is a snippet:

There are important differences between Pixium’s and Second Sight’s systems. Despite being much smaller than Second Sight’s Argus II device, Pixium’s PRIMA has over six times as many electrodes, meaning it can produce a more detailed artificial image. And where the Argus II system sent visual data from the glasses to the implant via radio waves, PRIMA literally beams information from the glasses into the eye using near-infrared radiation. The same infrared signal also powers the tiny implant.

Another system, the Intracortical Visual Prosthesis (ICVP), passed a two-year milestone: '... two years since [implantation], successful clinical testing has found the prosthesis provides study participants with an improved ability to navigate and perform basic, visually guided tasks.', an announcement states. The 400-electrode system creates artificial vision by bypassing damaged optical pathways to directly stimulate the visual cortex.

This is how the quality of the artificial vision is described:

... “blips on a radar screen.” With the implant, [the patient] can perceive people and objects represented in white and iridescent dots.

@emilylmullin provides a deeper dive into ICVP and an overview of the wider landscape:

Brian Bussard went blind in 2016. In 2022, he had 25 tiny chips installed in his brain which give him a limited sense of sight.

— Emily Mullin (@emilylmullin) April 17, 2024

I wrote about the state of brain implants to "restore" vision: https://t.co/bGLpWDwaKf

It seems like some consolidation happens not only horizontally, when companies that work on various indications merge, but also vertically. In the December 2023 newsletter I covered vertical integration in Precision and Science. Now, in order to ramp up brain implant production, Synchron is acquiring an equity stake in Acquandas, a German manufacturer responsible for layering the metals that make up one component of the company’s implant. Coverage by @ashleycapoot.

Funding

If you missed this - 🇺🇸 Crypto company Tether has invested $200 million in Blackrock Neurotech, taking a majority stake in the U.S. brain implant company. Here is an analysis by @naveen101. I'd agree with Naveen's point on crypto establishing a 'broader strategic commitment to this industry'. I think ideologically crypto is close to BCIs, in terms of attention to privacy, community involvement, reliability, diversity.

🇬🇧 Neurovalens raised £2.1M to help patients fight generalised anxiety disorder (GAD) with transcranial direct current stimulation (tDCS).

🇬🇧 Samphire Neuroscience raised $2.3M to develop a tDCS system to ease menstrual pain and mood disorders.

🇫🇮 Sooma raises €5M to develop a treatment for depression using tDCS.

🇳🇱 MindAffect secures €1.1M funding to simplify hearing diagnostics through electroencephalogram (EEG) and AI.

🇺🇸 Helius Medical Technologies announces post-IPO $6.4M public offering. The system is for treating gait and balance deficit via translingual neurostimulation.

🇺🇸 Neurable raised $13M. It develops an electroencephalogram (EEG)-powered platform to track focus, prevent fatigue, and open up possibilities for device interaction.

🇺🇸 Gates Ventures joins Bionaut series B-2. The company develops tiny robots to assist with surgeries/therapies in hydrocephalus, Dandy-Walker malformation, neuro-oncology, and other indications.

🇺🇸 electroCore announces a $9.3M registered post-IPO direct offering. It developed a non-invasive vagus nerve stimulation (nVNS) system to help with cluster headaches and improve sleep, among other things.

🇮🇱 Wearable Devices announces a $10 million post-IPO equity purchase agreement. The company develops an electromyography (EMG)-powered input interface technology for the B2C and B2B markets.

🇺🇦 Esper Bionics raises a $5M investment. Esper manufactures bionic prosthetics powered by EMG.

You might notice several small/medium-size post-IPO funding rounds in various forms. To me, this is a sign that neurotech has found its product-market fit in some narrow/medical applications and brings value to patients and investors. It has, however, not yet become a venture-fundable high-potential tech, similar to AI.

🇦🇹 Syntropic Medical secures early-stage funding. It develops light stimulation for the treatment of mental health disorders, starting with major depressive disorder (MDD).

🇺🇸 Sound and EEG are used by Elemind (raised a $12M funding round). The device measures EEG and instantly delivers precise acoustic feedback to reach deeper sleep.

📬📬📬 Subscribe for a monthly update on neurotech and neurotech investment/commercialisation activity.