Intel’s Investments in Cognitive Tech: Impact and New Opportunities

This article was originally published on Medium, and scored 122 views.

Intel contributes enormously to the development of cognitive technologies. As the leading supplier to Top500 supercomputer sites, the firm shapes the future of high performance computing (HPC) and the wider ecosystem (Table 1). Analyzing Intel’s investment activity may help to spot emerging trends in cognitive tech.

To date, Intel has finalized at least 20 transactions to acquire or invest in assets directly or indirectly related to various areas of cognitive tech, including natural language processing, speech recognition, robotics and others. The largest bets were placed on assets that at first glance have no clear connection with cognitive technologies (e.g. interconnects). However, it is precisely these assets that may shape the future of cognitive tech (Table 2).

Intel’s CEO, Brian Krzanich, has recently outlined the future of the company. Machine learning looks to be an integral part of his statement, even though it was mentioned only twice. Machine learning links several other pillars mentioned by the CEO, namely cloud/data center, connected things and connectivity[1]. Data centers are the places where machine learning takes place. The cloud is a medium for sharing the outcomes of machine learning with connected things, which may become active learners themselves. That is a story Intel may bring to life.

Intel’s investments and acquisitions are aligned with the CEO’s recent statement. For example, the company invests in infrastructure that makes data centers more cognitive tech friendly, e.g. field-programmable gate arrays (FPGAs) and interconnects. Intel also backs technologies that have the potential to bring new computing capabilities to connected things, including computer vision, speech recognition and advanced analytics.

A push towards machine learning by a chip giant opens new opportunities for the cognitive tech community. Better, and presumably cheaper, hardware for data centers leads to faster adoption of cognitive technologies by corporations. Technological changes in hardware, for instance the diversification of FPGAs into new verticals, create new challenges that startups may find worth solving.

New Workloads in Data Centers and in the Cloud

In 2015, Intel made its largest acquisition by closing a deal with Altera, an FPGA manufacturer, that valued the company at around $17B. To understand the reasoning behind the transaction and its timing, it helps to recall that back in 2010 Christopher Danely, an analyst at JP Morgan Securities, highlighted that Intel was considering acquiring an FPGA vendor[2]. The question is what motivated Intel to make such a bold move in 2015.

A possible incentive to enter the FPGA segment is that it is an emerging market at a time when tech companies’ data centers are experiencing a new type of workload. Workloads related to cognitive technologies, e.g. machine learning and speech and image recognition, are becoming more notable, though they are not yet large.

New types of workloads currently represent a marginal share of data centers’ work, but that share is expected to grow. For instance, the number of servers used for running deep learning at Microsoft ‘…is at most in the single digits percentage of all workload’[3]. In 2014, ~10% of search queries at Baidu were made by voice. Voice queries are expected to reach the 50% threshold by 2020[4].
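As a back-of-the-envelope check on the Baidu figures above, one can work out the yearly growth they imply. The sketch below assumes smooth compound growth in the voice share from roughly 10% in 2014 to 50% in 2020; that smoothness is an assumption of this example rather than a claim made by the source, and the function name is purely illustrative.

```python
def implied_annual_growth(start_share: float, end_share: float, years: int) -> float:
    """Return the constant yearly growth rate that takes start_share to end_share."""
    if start_share <= 0 or end_share <= 0 or years <= 0:
        raise ValueError("shares and years must be positive")
    return (end_share / start_share) ** (1 / years) - 1


# Figures quoted in the text: ~10% of queries by voice in 2014, 50% expected by 2020.
rate = implied_annual_growth(0.10, 0.50, 2020 - 2014)
print(f"Implied growth in voice-query share: {rate:.1%} per year")
```

Under that assumption, the share of voice queries would need to grow by roughly 31% a year, which gives a sense of how quickly such workloads could move from marginal to mainstream.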

Some of the cognitive tech workloads are mature enough to be allocated to different types of servers. For example, powerful graphics processing units (GPUs) assist with neural network training, while traditional central processors execute production workloads.

At the same time, a large part of deep learning network design is still ‘empirical’: it demands much experimentation and larger-than-optimal datasets, and therefore more power. The question of process acceleration is still relevant.

It is important to highlight that the cloud is critical for companies that deal with new cognitive tech workloads. As current endpoints can hardly provide the processing power required for workloads such as machine learning, delivering it through the cloud is deemed significant.

Unlike with traditional workloads, where the cloud is sometimes a ‘nice to have option’, the cloud is a ‘must have option’ for cognitive tech. For instance, one could potentially run a CRM on a desktop without turning to Salesforce for its cloud products. By contrast, training a neural network is hardly possible without relying on a provider’s powerful servers. Intel estimates that a third of cloud providers will be using FPGA accelerated server nodes by 2020[5].

As new types of workloads proliferate, customization of infrastructure also follows.

As Hemant Dhulla, the vice president of the data center division at Xilinx, an FPGA maker, puts it, ‘[we] are seeing that these data centers are separated into ‘pods’ or multiple racks of servers for specific workloads. For instance, some have pods set aside to do things like image resizing, as an example…’[6]

Intel’s Push into FPGAs and Challenges Associated with it

The required level of specialization of data center hardware may be achieved via FPGAs. Eric Chung at Microsoft Research explained to The Next Platform the rationale behind using FPGAs, in particular their power profile and flexibility.

According to Mr. Chung, FPGAs represent the balance ‘between something that is general purpose and specialized hardware’, even if their peak performance is lower than that of GPUs and they are difficult to program[7].

Microsoft, for example, uses FPGAs to speed up Bing[8]. Baidu has also been experimenting with FPGAs in traditional search, image and speech recognition workloads[9].

One may expect that with Intel’s help Altera’s FPGAs will diversify into new verticals and functions. For instance, FPGAs are moving into natural language processing, medical imaging and network packet processing. In some cases FPGAs are employed on the storage side, while in others they take on some computing workloads[10].

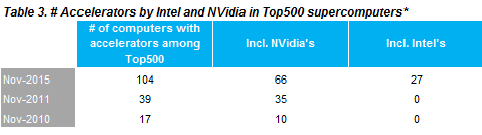

Intel’s push into FPGAs does not seem to be a reactive answer to market demand, but a logical move. Over the last five years, Intel has established a strong position in the Top500 list not only as a provider of CPUs, but also as a provider of the co-processors that accelerate them. Intel’s Xeon Phi co-processors accelerated 27 of the 500 most powerful computers in November 2015 (Table 3). Understanding the importance of hybrid computing, Intel has moved into FPGAs.

However, there are concerns about using FPGAs for cognitive tech workloads. As of November 2015, none of the Top500 most powerful computers used FPGA acceleration.

Urs Hölzle, the senior vice president of the technical infrastructure team at Google, mentioned that FPGAs are more of a ‘niche thing’ as they ‘… are much harder to program than a CPU… you use them in a place where you don’t have a choice’[11]. The FPGA hardware description languages, Verilog and VHDL, are not the easiest to learn, and alternatives such as OpenCL for Altera’s FPGAs and C for Xilinx’s FPGAs do not look optimal[12].

Moreover, in addition to the programming challenges, data movement and memory are further concerns when CPUs are accelerated by GPUs or FPGAs[13]. For example, a bottleneck appears at the PCI Express bus. NVIDIA explains: ‘…GPUs are connected to x86-based CPUs through the PCI Express (PCIe) interface, which limits the GPU’s ability to access the CPU memory system and is four- to five-times slower than typical CPU memory systems’[14]. Connecting FPGAs with CPUs is also challenging[15].

Both challenges, programming of FPGAs and data movement/memory, are on Intel’s radar and agenda.

To tackle the programming challenge, Intel is developing a suite of FPGA libraries, including standard acceleration for cloud, networking and traditional enterprise workloads. The company also considers machine learning applications to be of interest. Intel expects the suite to bolster the programmability and cloud use of FPGAs[16].

Technologies acquired with the Interconnect Technology Business of Cray and the InfiniBand Business of QLogic may be helpful in integrating CPUs with FPGAs and solving data flow/memory issues[17]. In the future, Intel expects to place an FPGA and a CPU on a single die. In addition, Intel seems to have the capabilities required to develop clusters with FPGAs based on remote direct memory access (RDMA) via InfiniBand.

Improving its capabilities in the data center business is not the only aim of Intel’s investment activity. Intel has acquired Xtremeinsights, a consulting company, in order to promote Intel’s distribution of Apache Hadoop, which is used in advanced analytics and machine learning[18].

Moreover, the company acquired assets in navigation, computer vision and natural language processing/speech recognition. These acquisitions are important for executing Intel’s strategy in connected things.

Connected Things

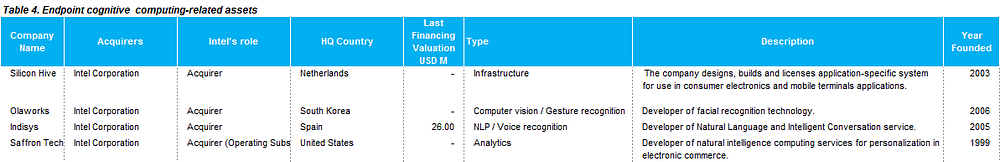

Offloading some computing to the endpoint looks to be an aim of Intel’s investments in the connected things area (Table 4).

Yann LeCun, the director of AI research at Facebook, highlights the challenge of computing on the device, which Intel seems to have noticed as well[19]. Running cognitive tech workloads on endpoints is an emerging trend because it allows the energy cost of local computation to be traded off against that of data transfer[20], provides low latency and can address privacy concerns by minimizing the exposure of sensitive data to the outside world. Therefore, one may suggest that system-on-chip (SoC) accelerators for cognitive functions could become common in the near future.
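The energy trade-off mentioned in note [20] can be illustrated with a minimal sketch. It assumes a deliberately simple linear model in which local energy scales with the number of operations and transfer energy scales with the number of bytes sent; the function name, the model and every number below are hypothetical placeholders chosen for illustration, not measurements from any device or figures from the sources cited here.

```python
def cheaper_to_compute_locally(ops: float, joules_per_op: float,
                               payload_bytes: float, joules_per_byte: float) -> bool:
    """Compare the energy of running a workload on the device with sending its data away.

    Simplified linear model (an assumption of this sketch): it ignores idle power,
    radio wake-up costs and the energy spent by the remote server.
    """
    local_energy = ops * joules_per_op                 # energy to compute on the endpoint
    transfer_energy = payload_bytes * joules_per_byte  # energy to ship the raw data
    return local_energy < transfer_energy


# Hypothetical placeholder values, not measurements.
decision = cheaper_to_compute_locally(
    ops=1e9, joules_per_op=1e-10,             # about 0.1 J to process locally
    payload_bytes=1e6, joules_per_byte=1e-6,  # about 1 J to transmit the data
)
print("compute locally" if decision else "offload to the cloud")
```

With these made-up values the device would compute locally; a more efficient radio link or a heavier model would tip the decision the other way, which is the kind of balance an on-chip accelerator is meant to shift.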

In pursuit of local computing, Intel has acquired Saffron, a cognitive computing platform provider, Silicon Hive, a developer of a tool for programming SoC components, and Olaworks, a mobile face recognition company. Indisys, a natural language processing company, was presumably also acquired with the purpose of integrating its technologies into Intel’s chips[21].

Intel believes that Saffron’s technologies ‘…deployed on small devices, can make intelligent local analytics possible in the Internet of Things’[22]. Moreover, it was rumored that combining the technologies behind Olaworks and Silicon Hive may help Intel move face-recognition workloads to mobile devices[23].

The company has also made at least 12 acquisitions in computer vision, speech recognition, navigation and robotics (Table 5). Some assets are integrated into Intel’s structures, for instance Telmap, which was acquired for $300M. In other cases, acquired technologies are incorporated into Intel’s products (e.g. Omek Interactive became a part of the RealSense platform) or operate independently (e.g. Nixie Labs, Open Bionics, Babybe).

Conclusion

Intel’s move into machine learning is a good sign for companies involved in cognitive tech, and it also outlines a new opportunity for startups.

As a company with deep pockets and market power, Intel may propel the adoption of cognitive tech by offering more affordable hardware and building a community of developers around it. With Intel’s support, FPGA adoption will likely increase and the technology’s use cases will diversify.

Intel’s investments also indicate where money is likely to move to in the future, e.g. into cognitive computing in the cloud and on a device.

Challenges associated with Intel’s strategy also open opportunities for cognitive tech companies.

For instance, solving the problems of integrating CPUs with FPGAs and of programming FPGAs may become a foundation for new businesses.

Update: a week after the post was published, Intel announced the acquisition of Itseez, a computer vision company. Intel had previously acquired at least three companies in the field, namely Olaworks, Omek Interactive and CognoVision Solutions. What is special about Itseez is that the company is focused not only on computer vision itself but also on its ‘…implementations for embedded and specialized hardware’[24].

‘Itseez will become a key ingredient for Intel’s Internet of Things Group (IOTG)…’ and will empower Intel’s move into the connected things market, highlighted in the post above[24].

I would like to thank all of those who have helped me with the note, especially its technical aspects.

***

[1] https://newsroom.intel.com/editorials/brian-krzanich-our-strategy-and-the-future-of-intel/

[2] http://electronicsb2b.efytimes.com/intel-altera-synthetic-processors-2/

[3] http://www.nextplatform.com/2015/08/27/microsoft-extends-fpga-reach-from-bing-to-deep-learning/

[4] http://www.fastcompany.com/3035721/elasticity/baidu-is-taking-search-out-of-text-era-and-taking-on-google-with-deep-learning

[5] http://www.forbes.com/sites/kurtmarko/2015/06/09/intel-fpga-software/#891fd4b75bf6

[6] http://www.nextplatform.com/2015/11/17/fpgas-glimmer-on-the-hpc-horizon-glint-in-hyperscale-sun/

[7] http://www.nextplatform.com/2015/08/27/microsoft-extends-fpga-reach-from-bing-to-deep-learning/

[8] https://gigaom.com/2014/08/14/researchers-hope-deep-learning-algorithms-can-run-on-fpgas-and-supercomputers/

[9] http://www.pcworld.com/article/2464260/microsoft-baidu-find-speedier-search-results-through-specialized-chips.html

[10] http://www.nextplatform.com/2015/03/24/fpga-market-floats-future-on-the-cloud/

[11] http://www.nextplatform.com/2015/04/29/google-will-do-anything-to-beat-moores-law/

[12] http://www.nextplatform.com/2015/05/14/fpgas-edging-closer-to-the-enterprise-starting-line/

[13] http://www.nextplatform.com/2015/05/14/fpgas-edging-closer-to-the-enterprise-starting-line/

[14] http://arstechnica.com/information-technology/2014/03/nvidia-and-ibm-create-gpu-interconnect-for-faster-supercomputing/

[15] See http://www.extremetech.com/extreme/184828-intel-unveils-new-xeon-chip-with-integrated-fpga-touts-20x-performance-boost and http://www.eejournal.com/archives/articles/20140902-socfpgas/

[16] http://www.nextplatform.com/2016/03/14/intel-marrying-fpga-beefy-broadwell-open-compute-future/

[17] http://www.nextplatform.com/2016/03/14/intel-marrying-fpga-beefy-broadwell-open-compute-future/

[18] https://newsroom.intel.com/chip-shots/chip-shot-intel-acquires-hadoop-consulting-firm-xtremeinsights/

[19] http://www.nextplatform.com/2015/05/11/deep-learning-pioneer-pushing-gpu-neural-network-limits/

[20] Sometimes it is cheaper energy-wise to compute locally than to transfer data over a wireless link.

[21] http://www.wired.com/2015/10/intel-is-building-artificial-smarts-right-into-its-chips/

[22] https://blogs.intel.com/technology/2015/10/intel-acquires-saffron-for-cognitive-computing/

[23] http://www.spimewrangler.com/blog/current-affairs/intels-first-full-acquisition-of-korean-firm-olaworks/

[24] https://newsroom.intel.com/editorials/intel-acquires-computer-vision-for-iot-automotive/

[*] Sources:

http://www.top500.org/lists/2015/11/highlights/

http://www.top500.org/lists/2005/06/highlights/

http://www.theregister.co.uk/2003/06/23/intel_powers_more_top500_supercomputers/